#Postgresql Optimization


ColdFusion with PostgreSQL: Optimizing Performance for Advanced SQL Features


Thingworx PostgreSQL Database Analysis and Cleanup

Introduction: In the context of Thingworx, maintaining a clean and optimized PostgreSQL database is crucial for ensuring optimal performance and reliability. Analyzing and cleaning up the database periodically can help identify and address issues related to data growth, performance bottlenecks, and disk space utilization.

Query for Analyzing Table Sizes: To identify tables occupying the most…


This Week in Rust 593

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @ThisWeekInRust on X (formerly Twitter) or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Want TWIR in your inbox? Subscribe here.

Updates from Rust Community

Newsletters

The Embedded Rustacean Issue #42

This Week in Bevy - 2025-03-31

Project/Tooling Updates

Fjall 2.8

EtherCrab, the pure Rust EtherCAT MainDevice, version 0.6 released

A process for handling Rust code in the core kernel

api-version: axum middleware for header based version selection

SALT: a VS Code Extension, seeking participants in a study on Rust usability

Observations/Thoughts

Introducing Stringleton

Rust Any Part 3: Finally we have Upcasts

Towards fearless SIMD, 7 years later

LLDB's TypeSystems: An Unfinished Interface

Mutation Testing in Rust

Embedding shared objects in Rust

Rust Walkthroughs

Architecting and building medium-sized web services in Rust with Axum, SQLx and PostgreSQL

Solving the ABA Problem in Rust with Hazard Pointers

Building a CoAP application on Ariel OS

How to Optimize your Rust Program for Slowness: Write a Short Program That Finishes After the Universe Dies

Inside ScyllaDB Rust Driver 1.0: A Fully Async Shard-Aware CQL Driver Using Tokio

Building a search engine from scratch, in Rust: part 2

Introduction to Monoio: A High-Performance Rust Runtime

Getting started with Rust on Google Cloud

Miscellaneous

An AlphaStation's SROM

Real-World Verification of Software for Cryptographic Applications

Public mdBooks

[video] Networking in Bevy with ECS replication - Hennadii

[video] Intermediate Representations for Reactive Structures - Pete

Crate of the Week

This week's crate is candystore, a fast, persistent key-value store that does not require LSM or WALs.

Thanks to Tomer Filiba for the self-suggestion!

Please submit your suggestions and votes for next week!

Calls for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization.

If you are a feature implementer and would like your RFC to appear in this list, add a call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

No calls for testing were issued this week by Rust, Rust language RFCs or Rustup.

Let us know if you would like your feature to be tracked as a part of this list.

Call for Participation; projects and speakers

CFP - Projects

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

If you are a Rust project owner and are looking for contributors, please submit tasks here or through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

CFP - Events

Are you a new or experienced speaker looking for a place to share something cool? This section highlights events that are being planned and are accepting submissions to join their event as a speaker.

* Rust Conf 2025 Call for Speakers | Closes 2025-04-29 11:59 PM PDT | Seattle, WA, US | 2025-09-02 - 2025-09-05

If you are an event organizer hoping to expand the reach of your event, please submit a link to the website through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

Updates from the Rust Project

438 pull requests were merged in the last week

Compiler

allow defining opaques in statics and consts

avoid wrapping constant allocations in packed structs when not necessary

perform less decoding if it has the same syntax context

stabilize precise_capturing_in_traits

uplift clippy::invalid_null_ptr_usage lint as invalid_null_arguments

Library

allow spawning threads after TLS destruction

override PartialOrd methods for bool

simplify expansion for format_args!()

stabilize const_cell

Rustdoc

greatly simplify doctest parsing and information extraction

rearrange Item/ItemInner

Clippy

new lint: char_indices_as_byte_indices

add manual_dangling_ptr lint

respect #[expect] and #[allow] within function bodies for missing_panics_doc

do not make incomplete or invalid suggestions

do not warn about shadowing in a destructuring assignment

expand obfuscated_if_else to support {then(), then_some()}.unwrap_or_default()

fix the primary span of redundant_pub_crate when flagging nameless items

fix option_if_let_else suggestion when coercion requires explicit cast

fix unnested_or_patterns suggestion in let

make collapsible_if recognize the let_chains feature

make missing_const_for_fn operate on non-optimized MIR

more natural suggestions for cmp_owned

collapsible_if: prevent including preceding whitespaces if line contains non blanks

properly handle expansion in single_match

validate paths in disallowed_* configurations

Rust-Analyzer

allow crate authors to control completion of their things

avoid relying on block_def_map() needlessly

fix debug sourceFileMap when using cppvsdbg

fix format_args lowering using wrong integer suffix

fix a bug in orphan rules calculation

fix panic in progress due to splitting unicode incorrectly

use medium durability for crate-graph changes, high for library source files

Rust Compiler Performance Triage

Positive week, with a lot of primary improvements and just a few secondary regressions. A single big regression got reverted.

Triage done by @panstromek. Revision range: 4510e86a..2ea33b59

Summary:

| (instructions:u) | mean | range | count |
|---|---|---|---|
| Regressions ❌ (primary) | - | - | 0 |
| Regressions ❌ (secondary) | 0.9% | [0.2%, 1.5%] | 17 |
| Improvements ✅ (primary) | -0.4% | [-4.5%, -0.1%] | 136 |
| Improvements ✅ (secondary) | -0.6% | [-3.2%, -0.1%] | 59 |
| All ❌✅ (primary) | -0.4% | [-4.5%, -0.1%] | 136 |

Full report here.

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

Tracking Issues & PRs

Rust

Tracking Issue for slice::array_chunks

Stabilize cfg_boolean_literals

Promise array::from_fn is generated in order of increasing indices

Stabilize repr128

Stabilize naked_functions

Fix missing const for inherent pointer replace methods

Rust RFCs

core::marker::NoCell in bounds (previously known an [sic] Freeze)

Cargo

Stabilize automatic garbage collection.

Other Areas

No Items entered Final Comment Period this week for Language Team, Language Reference or Unsafe Code Guidelines.

Let us know if you would like your PRs, Tracking Issues or RFCs to be tracked as a part of this list.

New and Updated RFCs

Allow &&, ||, and ! in cfg

Upcoming Events

Rusty Events between 2025-04-02 - 2025-04-30 🦀

Virtual

2025-04-02 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

2025-04-03 | Virtual (Nürnberg, DE) | Rust Nurnberg DE

Rust Nürnberg online

2025-04-03 | Virtual | Ardan Labs

Communicate with Channels in Rust

2025-04-05 | Virtual (Kampala, UG) | Rust Circle Meetup

Rust Circle Meetup

2025-04-08 | Virtual (Dallas, TX, US) | Dallas Rust User Meetup

Second Tuesday

2025-04-10 | Virtual (Berlin, DE) | Rust Berlin

Rust Hack and Learn

2025-04-15 | Virtual (Washington, DC, US) | Rust DC

Mid-month Rustful

2025-04-16 | Virtual (Vancouver, BC, CA) | Vancouver Rust

Rust Study/Hack/Hang-out

2025-04-17 | Virtual and In-Person (Redmond, WA, US) | Seattle Rust User Group

April, 2025 SRUG (Seattle Rust User Group) Meetup

2025-04-22 | Virtual (Dallas, TX, US) | Dallas Rust User Meetup

Fourth Tuesday

2025-04-23 | Virtual (Cardiff, UK) | Rust and C++ Cardiff

**Beyond embedded - OS development in Rust**

2025-04-24 | Virtual (Berlin, DE) | Rust Berlin

Rust Hack and Learn

2025-04-24 | Virtual (Charlottesville, VA, US) | Charlottesville Rust Meetup

Part 2: Quantum Computers Can’t Rust-Proof This!

Asia

2025-04-05 | Bangalore/Bengaluru, IN | Rust Bangalore

April 2025 Rustacean meetup

2025-04-22 | Tel Aviv-Yafo, IL | Rust 🦀 TLV

In person Rust April 2025 at Braavos in Tel Aviv in collaboration with StarkWare

Europe

2025-04-02 | Cambridge, UK | Cambridge Rust Meetup

Monthly Rust Meetup

2025-04-02 | Köln, DE | Rust Cologne

Rust in April: Rust Embedded, Show and Tell

2025-04-02 | München, DE | Rust Munich

Rust Munich 2025 / 1 - hybrid

2025-04-02 | Oxford, UK | Oxford Rust Meetup Group

Oxford Rust and C++ social

2025-04-02 | Stockholm, SE | Stockholm Rust

Rust Meetup @Funnel

2025-04-03 | Oslo, NO | Rust Oslo

Rust Hack'n'Learn at Kampen Bistro

2025-04-08 | Olomouc, CZ | Rust Moravia

3. Rust Moravia Meetup (Real Embedded Rust)

2025-04-09 | Girona, ES | Rust Girona

Rust Girona Hack & Learn 04 2025

2025-04-09 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup

2025-04-10 | Karlsruhe, DE | Rust Hack & Learn Karlsruhe

Karlsruhe Rust Hack and Learn Meetup bei BlueYonder

2025-04-15 | Leipzig, DE | Rust - Modern Systems Programming in Leipzig

Topic TBD

2025-04-15 | London, UK | Women in Rust

WIR x WCC: Finding your voice in Tech

2025-04-19 | Istanbul, TR | Türkiye Rust Community

Rust Konf Türkiye

2025-04-23 | London, UK | London Rust Project Group

Fusing Python with Rust using raw C bindings

2025-04-24 | Aarhus, DK | Rust Aarhus

Talk Night at MFT Energy

2025-04-24 | Edinburgh, UK | Rust and Friends

Rust and Friends (evening pub)

2025-04-24 | Manchester, UK | Rust Manchester

Rust Manchester April Code Night

2025-04-25 | Edinburgh, UK | Rust and Friends

Rust and Friends (daytime coffee)

2025-04-29 | Paris, FR | Rust Paris

Rust meetup #76

North America

2025-04-03 | Chicago, IL, US | Chicago Rust Meetup

Rust Happy Hour

2025-04-03 | Montréal, QC, CA | Rust Montréal

April Monthly Social

2025-04-03 | Saint Louis, MO, US | STL Rust

icu4x - resource-constrained internationalization (i18n)

2025-04-06 | Boston, MA, US | Boston Rust Meetup

Kendall Rust Lunch, Apr 6

2025-04-08 | New York, NY, US | Rust NYC

Rust NYC: Building a full-text search Postgres extension in Rust

2025-04-10 | Portland, OR, US | PDXRust

TetaNES: A Vaccination for Rust—No Needle, Just the Borrow Checker

2025-04-14 | Boston, MA, US | Boston Rust Meetup

Coolidge Corner Brookline Rust Lunch, Apr 14

2025-04-17 | Nashville, TN, US | Music City Rust Developers

Using Rust For Web Series 1 : Why HTMX Is Bad

2025-04-17 | Redmond, WA, US | Seattle Rust User Group

April, 2025 SRUG (Seattle Rust User Group) Meetup

2025-04-23 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

2025-04-25 | Boston, MA, US | Boston Rust Meetup

Ball Square Rust Lunch, Apr 25

Oceania

2025-04-09 | Sydney, NS, AU | Rust Sydney

Crab 🦀 X 🕳️🐇

2025-04-14 | Christchurch, NZ | Christchurch Rust Meetup Group

Christchurch Rust Meetup

2025-04-22 | Barton, AC, AU | Canberra Rust User Group

April Meetup

South America

2025-04-03 | Buenos Aires, AR | Rust en Español

Abril - Lambdas y más!

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

If you write a bug in your Rust program, Rust doesn’t blame you. Rust asks “how could the compiler have spotted that bug”.

– Ian Jackson blogging about Rust

Despite a lack of suggestions, llogiq is quite pleased with his choice.

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, U007D, joelmarcey, mariannegoldin, bennyvasquez, bdillo

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust


Why Tableau is Essential in Data Science: Transforming Raw Data into Insights

Data science is all about turning raw data into valuable insights. But numbers and statistics alone don’t tell the full story—they need to be visualized to make sense. That’s where Tableau comes in.

Tableau is a powerful tool that helps data scientists, analysts, and businesses see and understand data better. It simplifies complex datasets, making them interactive and easy to interpret. But with so many tools available, why is Tableau a must-have for data science? Let’s explore.

1. The Importance of Data Visualization in Data Science

Imagine you’re working with millions of data points from customer purchases, social media interactions, or financial transactions. Analyzing raw numbers manually would be overwhelming.

That’s why visualization is crucial in data science:

Identifies trends and patterns – Instead of sifting through spreadsheets, you can quickly spot trends in a visual format.

Makes complex data understandable – Graphs, heatmaps, and dashboards simplify the interpretation of large datasets.

Enhances decision-making – Stakeholders can easily grasp insights and make data-driven decisions faster.

Saves time and effort – Instead of writing lengthy reports, an interactive dashboard tells the story in seconds.

Without tools like Tableau, data science would be limited to experts who can code and run statistical models. With Tableau, insights become accessible to everyone—from data scientists to business executives.

2. Why Tableau Stands Out in Data Science

A. User-Friendly and Requires No Coding

One of the biggest advantages of Tableau is its drag-and-drop interface. Unlike Python or R, which require programming skills, Tableau allows users to create visualizations without writing a single line of code.

Even if you’re a beginner, you can:

✅ Upload data from multiple sources

✅ Create interactive dashboards in minutes

✅ Share insights with teams easily

This no-code approach makes Tableau ideal for both technical and non-technical professionals in data science.

B. Handles Large Datasets Efficiently

Data scientists often work with massive datasets—whether it’s financial transactions, customer behavior, or healthcare records. Traditional tools like Excel struggle with large volumes of data.

Tableau, on the other hand:

Can process millions of rows without slowing down

Optimizes performance using advanced data engine technology

Supports real-time data streaming for up-to-date analysis

This makes it a go-to tool for businesses that need fast, data-driven insights.

C. Connects with Multiple Data Sources

A major challenge in data science is bringing together data from different platforms. Tableau seamlessly integrates with a variety of sources, including:

Databases: MySQL, PostgreSQL, Microsoft SQL Server

Cloud platforms: AWS, Google BigQuery, Snowflake

Spreadsheets and APIs: Excel, Google Sheets, web-based data sources

This flexibility allows data scientists to combine datasets from multiple sources without needing complex SQL queries or scripts.

D. Real-Time Data Analysis

Industries like finance, healthcare, and e-commerce rely on real-time data to make quick decisions. Tableau’s live data connection allows users to:

Track stock market trends as they happen

Monitor website traffic and customer interactions in real time

Detect fraudulent transactions instantly

Instead of waiting for reports to be generated manually, Tableau delivers insights as events unfold.

E. Advanced Analytics Without Complexity

While Tableau is known for its visualizations, it also supports advanced analytics. You can:

Forecast trends based on historical data

Perform clustering and segmentation to identify patterns

Integrate with Python and R for machine learning and predictive modeling

This means data scientists can combine deep analytics with intuitive visualization, making Tableau a versatile tool.

3. How Tableau Helps Data Scientists in Real Life

Tableau has been adopted across many industries to make data science more impactful and accessible. Here are some real-life scenarios:

A. Healthcare Analytics

Hospitals and research institutions use Tableau to:

Monitor patient recovery rates and predict outbreaks of diseases

Analyze hospital occupancy and resource allocation

Identify trends in patient demographics and treatment results

B. Finance and Banking

Banks and investment firms rely on Tableau to:

✅ Detect fraud by analyzing transaction patterns

✅ Track stock market fluctuations and make informed investment decisions

✅ Assess credit risk and loan performance

C. Marketing and Customer Insights

Companies use Tableau to:

✅ Track customer buying behavior and personalize recommendations

✅ Analyze social media engagement and campaign effectiveness

✅ Optimize ad spend by identifying high-performing channels

D. Retail and Supply Chain Management

Retailers leverage Tableau to:

✅ Forecast product demand and adjust inventory levels

✅ Identify regional sales trends and adjust marketing strategies

✅ Optimize supply chain logistics and reduce delivery delays

These applications show why Tableau is a must-have for data-driven decision-making.

4. Tableau vs. Other Data Visualization Tools

There are many visualization tools available, but Tableau consistently ranks as one of the best. Here’s why:

Tableau vs. Excel – Excel struggles with big data and lacks interactivity; Tableau handles large datasets effortlessly.

Tableau vs. Power BI – Power BI is great for Microsoft users, but Tableau offers more flexibility across different data sources.

Tableau vs. Python (Matplotlib, Seaborn) – Python libraries require coding skills, while Tableau simplifies visualization for all users.

This makes Tableau the go-to tool for both beginners and experienced professionals in data science.

5. Conclusion

Tableau has become an essential tool in data science because it simplifies data visualization, handles large datasets, and integrates seamlessly with various data sources. It enables professionals to analyze, interpret, and present data interactively, making insights accessible to everyone—from data scientists to business leaders.

If you’re looking to build a strong foundation in data science, learning Tableau is a smart career move. Many data science courses now include Tableau as a key skill, as companies increasingly demand professionals who can transform raw data into meaningful insights.

In a world where data is the driving force behind decision-making, Tableau ensures that the insights you uncover are not just accurate—but also clear, impactful, and easy to act upon.



$AIGRAM - your AI assistant for Telegram data

Introduction

$AIGRAM is an AI-powered platform designed to help users discover and organize Telegram channels and groups more effectively. By leveraging advanced technologies such as natural language processing, semantic search, and machine learning, AIGRAM enhances the way users explore content on Telegram.

With deep learning algorithms, AIGRAM processes large amounts of data to deliver precise and relevant search results, making it easier to find the right communities. The platform seamlessly integrates with Telegram, supporting better connections and collaboration. Built with scalability in mind, AIGRAM is cloud-based and API-driven, offering a reliable and efficient tool to optimize your Telegram experience.

Tech Stack

AIGRAM uses a combination of advanced AI, scalable infrastructure, and modern tools to deliver its Telegram search and filtering features.

AI & Machine Learning:

NLP: Transformer models like BERT, GPT for understanding queries and content.

Machine Learning: Algorithms for user behavior and query optimization.

Embeddings: Contextual vectorization (word2vec, FAISS) for semantic search.

Recommendation System: AI-driven suggestions for channels and groups.

Backend:

Languages: Python (AI models), Node.js (API).

Databases: PostgreSQL, Elasticsearch (search), Redis (caching).

API Frameworks: FastAPI, Express.js.

Frontend:

Frameworks: React.js, Material-UI, Redux for state management.

This tech stack powers AIGRAM’s high-performance, secure, and scalable platform.
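To make the "embeddings for semantic search" idea above concrete, here is a minimal sketch of ranking channels by cosine similarity between a query vector and per-channel vectors. It is an illustration only, not AIGRAM's implementation: the channel names and the three-dimensional toy vectors are hypothetical, and a real system would obtain vectors from an embedding model and would likely use an index such as FAISS rather than a linear scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_channels(query_vec, channel_vecs, top_k=3):
    """Return the top_k (name, score) pairs, most similar first."""
    scored = [
        (name, cosine_similarity(query_vec, vec))
        for name, vec in channel_vecs.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy embeddings; real ones would have hundreds of dimensions.
CHANNELS = {
    "solana_news": [0.9, 0.1, 0.0],
    "cooking_tips": [0.0, 0.2, 0.9],
    "memecoin_alerts": [0.8, 0.3, 0.1],
}

if __name__ == "__main__":
    for name, score in rank_channels([1.0, 0.2, 0.0], CHANNELS, top_k=2):
        print(f"{name}: {score:.3f}")
```

A linear scan like this is fine for a handful of channels; an approximate nearest-neighbor index becomes worthwhile once the collection grows large.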

Mission

AIGRAM’s mission is to simplify the trading experience for memecoin traders on the Solana blockchain. Using advanced AI technologies, AIGRAM helps traders easily discover, filter, and engage with the most relevant Telegram groups and channels.

With the speed of Solana and powerful search features, AIGRAM ensures traders stay ahead in the fast-paced memecoin market. Our platform saves time, provides clarity, and turns complex information into valuable insights.

We aim to be the go-to tool for Solana traders, helping them make better decisions and maximize their success.

Our socials:

Website - https://aigram.software/

Gitbook - https://aigram-1.gitbook.io/

X - https://x.com/aigram_software

Dex - https://dexscreener.com/solana/baydg5htursvpw2y2n1pfrivoq9rwzjjptw9w61nm25u


More Progress, More Steps to Do

Day 255 - Jul 17th, 12.024

Made some big progress on the test, and now I'm able to import and send all 70 thousand rows to a PostgreSQL database, creating the necessary tables along the way, in some 20 seconds. It's not the best time, I would say, but I don't know how to optimize the code yet, and I still need to build an API and hopefully some front-end to fetch and search this data. However, I don't really know exactly how they want this API to be, so until I get an answer, I will try to refactor the code to make it easier to change if necessary.
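For reference, one common first step when speeding up an import like this is to send rows in batches instead of one INSERT per row. The sketch below is not the code from this project: the table name `items`, its columns, and the batch size are hypothetical, and it only builds the SQL text and flattened parameter list, using `%s` placeholders in the style of Python PostgreSQL drivers. Table and column names are interpolated directly, so they are assumed to come from trusted code rather than user input.

```python
from itertools import islice


def chunked(rows, size):
    """Yield lists of at most `size` rows from any iterable."""
    it = iter(rows)
    while True:
        chunk = list(islice(it, size))
        if not chunk:
            return
        yield chunk


def build_insert(table, columns, n_rows):
    """Build one multi-row INSERT statement with a placeholder per value."""
    row_placeholders = "(" + ", ".join(["%s"] * len(columns)) + ")"
    values = ", ".join([row_placeholders] * n_rows)
    return f"INSERT INTO {table} ({', '.join(columns)}) VALUES {values}"


def plan_batches(table, columns, rows, size=1000):
    """Yield (sql, params) pairs, one per batch, ready to pass to a driver's execute()."""
    for chunk in chunked(rows, size):
        sql = build_insert(table, columns, len(chunk))
        params = [value for row in chunk for value in row]
        yield sql, params


if __name__ == "__main__":
    # Hypothetical sample data standing in for the imported rows.
    sample = [(1, "a"), (2, "b"), (3, "c")]
    for sql, params in plan_batches("items", ["id", "name"], sample, size=2):
        print(sql, params)
```

Each yielded pair would be passed to the database driver inside a single transaction. For very large loads, PostgreSQL's `COPY` command is usually faster still than batched inserts.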

Today's artists & creative things

Music: Novocaine - by The Unlikely Candidates

© 2024 Gustavo "Guz" L. de Mello. Licensed under CC BY-SA 4.0

2 notes

·

View notes

Text

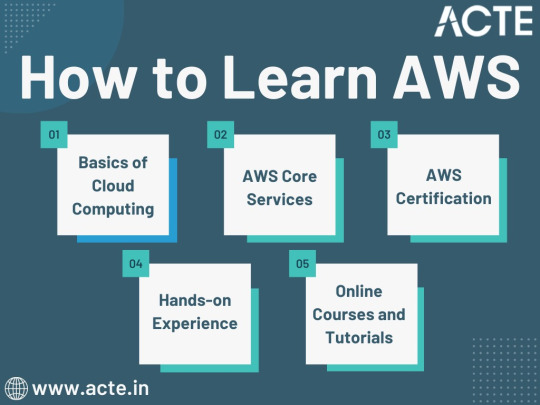

Journey to AWS Proficiency: Unveiling Core Services and Certification Paths

Amazon Web Services, often referred to as AWS, stands at the forefront of cloud technology and has revolutionized the way businesses and individuals leverage the power of the cloud. This blog serves as your comprehensive guide to understanding AWS, exploring its core services, and learning how to master this dynamic platform. From the fundamentals of cloud computing to the hands-on experience of AWS services, we'll cover it all. Additionally, we'll discuss the role of education and training, specifically highlighting the value of ACTE Technologies in nurturing your AWS skills, concluding with a mention of their AWS courses.

The Journey to AWS Proficiency:

1. Basics of Cloud Computing:

Getting Started: Before diving into AWS, it's crucial to understand the fundamentals of cloud computing. Begin by exploring the three primary service models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Gain a clear understanding of what cloud computing is and how it's transforming the IT landscape.

Key Concepts: Delve into the key concepts and advantages of cloud computing, such as scalability, flexibility, cost-effectiveness, and disaster recovery. Simultaneously, explore the potential challenges and drawbacks to get a comprehensive view of cloud technology.

2. AWS Core Services:

Elastic Compute Cloud (EC2): Start your AWS journey with Amazon EC2, which provides resizable compute capacity in the cloud. Learn how to create virtual servers, known as instances, and configure them to your specifications. Gain an understanding of the different instance types and how to deploy applications on EC2.

Simple Storage Service (S3): Explore Amazon S3, a secure and scalable storage service. Discover how to create buckets to store data and objects, configure permissions, and access data using a web interface or APIs.

Relational Database Service (RDS): Understand the importance of databases in cloud applications. Amazon RDS simplifies database management and maintenance. Learn how to set up, manage, and optimize RDS instances for your applications. Dive into database engines like MySQL, PostgreSQL, and more.

3. AWS Certification:

Certification Paths: AWS offers a range of certifications for cloud professionals, from foundational to professional levels. Consider enrolling in certification courses to validate your knowledge and expertise in AWS. AWS Certified Cloud Practitioner, AWS Certified Solutions Architect, and AWS Certified DevOps Engineer are some of the popular certifications to pursue.

Preparation: To prepare for AWS certifications, explore recommended study materials, practice exams, and official AWS training. ACTE Technologies, a reputable training institution, offers AWS certification training programs that can boost your confidence and readiness for the exams.

4. Hands-on Experience:

AWS Free Tier: Register for an AWS account and take advantage of the AWS Free Tier, which offers limited free access to various AWS services for 12 months. Practice creating instances, setting up S3 buckets, and exploring other services within the free tier. This hands-on experience is invaluable in gaining practical skills.

5. Online Courses and Tutorials:

Learning Platforms: Explore online learning platforms like Coursera, edX, Udemy, and LinkedIn Learning. These platforms offer a wide range of AWS courses taught by industry experts. They cover various AWS services, architecture, security, and best practices.

Official AWS Resources: AWS provides extensive online documentation, whitepapers, and tutorials. Their website is a goldmine of information for those looking to learn more about specific AWS services and how to use them effectively.

Amazon Web Services (AWS) represents an exciting frontier in the realm of cloud computing. As businesses and individuals increasingly rely on the cloud for innovation and scalability, AWS stands as a pivotal platform. The journey to AWS proficiency involves grasping fundamental cloud concepts, exploring core services, obtaining certifications, and acquiring practical experience. To expedite this process, online courses, tutorials, and structured training from renowned institutions like ACTE Technologies can be invaluable. ACTE Technologies' comprehensive AWS training programs provide hands-on experience, making your quest to master AWS more efficient and positioning you for a successful career in cloud technology.


5 useful tools for engineers! Introducing recommendations to improve work efficiency

Engineers have to do a huge amount of coding. It’s really tough having to handle other duties and schedule management at the same time. Having the right tools is key to being a successful engineer.

Here are some tools that will help you improve your work efficiency.

1.SourceTree

“SourceTree” is a free Git client provided by Atlassian. It gives developers and teams who manage projects with Git a visual interface and rich functionality for source code management and version control, supporting efficient development work.

2.Charles

“Charles” is an HTTP debugging proxy for web development. It captures HTTP and HTTPS traffic so that the communication between a client and a server can be visualized and analyzed. This allows web developers and system administrators to observe requests and responses for debugging, testing, performance optimization, and more.

3.iTerm2

“iTerm2” is a highly functional terminal emulator for macOS, and is an application that allows terminal operations to be performed more comfortably and efficiently. It offers more features than the standard Terminal application. It has rich features such as tab splitting, window splitting, session management, customizable appearance, and script execution.

4.Navicat

Navicat is an integrated tool for performing database management and development tasks and supports many major database systems (MySQL, PostgreSQL, SQLite, Oracle, SQL Server, etc.). Using Navicat, you can efficiently perform tasks such as database structure design, data editing and management, SQL query execution, data modeling, backup and restore.

5.CodeLF

CodeLF (Code Language Framework) is a tool designed to help find, navigate, and understand code within large source code bases. Key features include finding and querying symbols such as functions, variables, and classes in your codebase, viewing code snippets, and visualizing relationships between code. It can aid in efficient code navigation and understanding, increasing productivity in the development process.

2 notes

·

View notes

Text

The Ultimate Guide to Web Development

In today’s digital age, having a strong online presence is crucial for individuals and businesses alike. Whether you’re a seasoned developer or a newcomer to the world of coding, mastering the art of web development opens up a world of opportunities. In this comprehensive guide, we’ll delve into the intricate world of web development, exploring the fundamental concepts, tools, and techniques needed to thrive in this dynamic field. Join us on this journey as we unlock the secrets to creating stunning websites and robust web applications.

Understanding the Foundations

At the core of every successful website lies a solid foundation built upon key principles and technologies. The Ultimate Guide to Web Development begins with an exploration of HTML, CSS, and JavaScript — the building blocks of the web. HTML provides the structure, CSS adds style and aesthetics, while JavaScript injects interactivity and functionality. Together, these three languages form the backbone of web development, empowering developers to craft captivating user experiences.

Collaborating with a Software Development Company in USA

For businesses looking to build robust web applications or enhance their online presence, collaborating with a Software Development Company in USA can be invaluable. These companies offer expertise in a wide range of technologies and services, from custom software development to web design and digital marketing. By partnering with a reputable company, businesses can access the skills and resources needed to bring their vision to life and stay ahead of the competition in today’s digital landscape.

Exploring the Frontend

Once you’ve grasped the basics, it’s time to delve deeper into the frontend realm. From responsive design to user interface (UI) development, there’s no shortage of skills to master. CSS frameworks like Bootstrap and Tailwind CSS streamline the design process, allowing developers to create visually stunning layouts with ease. Meanwhile, JavaScript libraries such as React, Angular, and Vue.js empower developers to build dynamic and interactive frontend experiences.

Embracing Backend Technologies

While the frontend handles the visual aspect of a website, the backend powers its functionality behind the scenes. In this section of The Ultimate Guide to Web Development, we explore the world of server-side programming and database management. Popular backend technologies such as Python, Node.js, and Ruby on Rails enable developers to create robust server-side applications, while databases such as MySQL, MongoDB, and PostgreSQL store and retrieve data efficiently.

Mastering Full-Stack Development

With a solid understanding of both frontend and backend technologies, aspiring developers can embark on the journey of full-stack development, whether independently or as part of a software development company in the USA. Combining the best of both worlds, full-stack developers possess the skills to build end-to-end web solutions from scratch. Whether it’s creating RESTful APIs, integrating third-party services, or optimizing performance, mastering full-stack development opens doors to endless possibilities in the digital landscape.

Optimizing for Performance and Accessibility

In today’s fast-paced world, users expect websites to load quickly and perform seamlessly across all devices. As such, optimizing performance and ensuring accessibility are paramount considerations for web developers. From minimizing file sizes and leveraging caching techniques to adhering to web accessibility standards such as WCAG (Web Content Accessibility Guidelines), every aspect of development plays a crucial role in delivering an exceptional user experience.
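The two techniques named above, smaller payloads and caching, can be sketched with the Python standard library. This is a minimal illustration rather than a production server: the function names `prepare_asset` and `respond`, the sample stylesheet, and the one-hour cache lifetime are all assumptions made for the example.

```python
import gzip
import hashlib
from typing import Optional, Tuple


def prepare_asset(body: bytes) -> dict:
    """Compress a static asset and attach caching headers to it."""
    # The ETag is derived from the uncompressed content, so it changes
    # only when the asset itself changes.
    etag = '"' + hashlib.sha256(body).hexdigest()[:16] + '"'
    return {
        "body": gzip.compress(body),
        "headers": {
            "Content-Encoding": "gzip",
            "Cache-Control": "public, max-age=3600",  # assumed one-hour lifetime
            "ETag": etag,
        },
    }


def respond(asset: dict, if_none_match: Optional[str]) -> Tuple[int, bytes]:
    """Return (status, body); 304 with an empty body if the client's copy is current."""
    if if_none_match == asset["headers"]["ETag"]:
        return 304, b""
    return 200, asset["body"]


# Hypothetical stylesheet with a lot of repetition, which compresses well.
stylesheet = b"body { margin: 0; padding: 0; }\n" * 200
asset = prepare_asset(stylesheet)
first_status, first_body = respond(asset, None)
repeat_status, repeat_body = respond(asset, asset["headers"]["ETag"])
print(len(stylesheet), len(first_body), first_status, repeat_status)
```

The first request receives the compressed body. A repeat request that sends back the same ETag receives a 304 status with no body, so the browser reuses its cached copy.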

Staying Ahead with Emerging Technologies

The field of web development is constantly evolving, with new technologies and trends emerging at a rapid pace. In this ever-changing landscape, staying ahead of the curve is essential for success. Whether it’s adopting progressive web app (PWA) technologies, harnessing the power of machine learning and artificial intelligence, or embracing the latest frontend frameworks, keeping abreast of emerging technologies is key to maintaining a competitive edge.

Collaborating with a Software Development Company in USA

For businesses looking to elevate their online presence, partnering with a reputable software development company in USA can be a game-changer. With a wealth of experience and expertise, these companies offer tailored solutions to meet the unique needs of their clients. Whether it’s custom web development, e-commerce solutions, or enterprise-grade applications, collaborating with a trusted partner ensures seamless execution and unparalleled results.

Conclusion: Unlocking the Potential of Web Development

As we conclude our journey through The Ultimate Guide to Web Development, it’s clear that mastering the art of web development is more than just writing code — it’s about creating experiences that captivate and inspire. Whether you’re a novice coder or a seasoned veteran, the world of web development offers endless opportunities for growth and innovation. By understanding the fundamental principles, embracing emerging technologies, and collaborating with industry experts, you can unlock the full potential of web development and shape the digital landscape for years to come.


Text

With SQL Server, Oracle, MySQL, MongoDB, PostgreSQL, and more, we are your dedicated partner in managing, optimizing, securing, and supporting your data infrastructure.

For more, visit: https://briskwinit.com/database-services/


Text

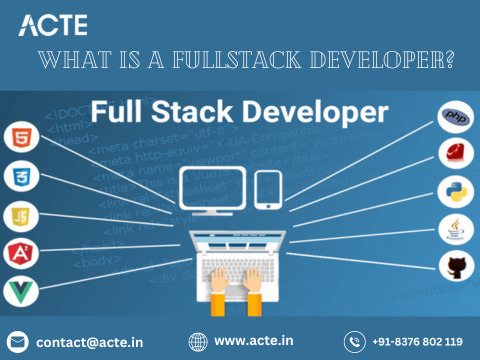

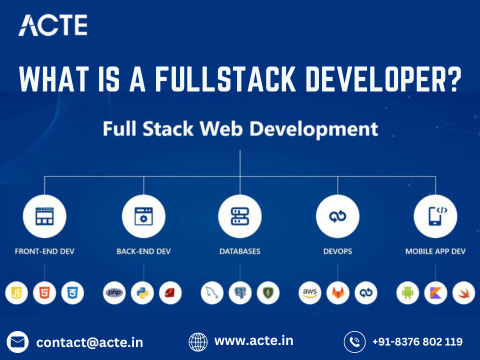

Navigating the Full Stack: A Holistic Approach to Web Development Mastery

Introduction: In the ever-evolving world of web development, full stack developers are the architects behind the seamless integration of frontend and backend technologies. Excelling in both realms is essential for creating dynamic, user-centric web applications. In this comprehensive exploration, we'll embark on a journey through the multifaceted landscape of full stack development, uncovering the intricacies of crafting compelling user interfaces and managing robust backend systems.

Frontend Development: Crafting Engaging User Experiences

1. Markup and Styling Mastery:

HTML (Hypertext Markup Language): Serves as the foundation for structuring web content, providing the framework for user interaction.

CSS (Cascading Style Sheets): Dictates the visual presentation of HTML elements, enhancing the aesthetic appeal and usability of web interfaces.

2. Dynamic Scripting Languages:

JavaScript: Empowers frontend developers to add interactivity and responsiveness to web applications, facilitating seamless user experiences.

Frontend Frameworks and Libraries: Harness the power of frameworks like React, Angular, or Vue.js to streamline development and enhance code maintainability.

3. Responsive Design Principles:

Ensure web applications are accessible and user-friendly across various devices and screen sizes.

Implement responsive design techniques to adapt layout and content dynamically, optimizing user experiences for all users.

4. User-Centric Design Practices:

Employ UX design methodologies to create intuitive interfaces that prioritize user needs and preferences.

Conduct usability testing and gather feedback to refine interface designs and enhance overall user satisfaction.

Backend Development: Managing Data and Logic

1. Server-side Proficiency:

Backend Programming Languages: Utilize languages and runtimes like JavaScript (Node.js), Python, Ruby, or Java to implement server-side logic and handle client requests.

Server Frameworks and Tools: Leverage frameworks such as Express.js, Django, or Ruby on Rails to expedite backend development and ensure scalability.

2. Effective Database Management:

Relational and Non-relational Databases: Employ databases like MySQL, PostgreSQL, MongoDB, or Firebase to store and manage structured and unstructured data efficiently.

API Development: Design and implement RESTful or GraphQL APIs to facilitate communication between the frontend and backend components of web applications.

3. Security and Performance Optimization:

Implement robust security measures to safeguard user data and protect against common vulnerabilities.

Optimize backend performance through techniques such as caching, query optimization, and load balancing, ensuring optimal application responsiveness.
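Of the techniques listed under performance optimization, caching is the simplest to illustrate. The sketch below uses Python's `functools.lru_cache` to keep repeated lookups in memory. The function `fetch_display_name` is a hypothetical stand-in for a slow database or network call, and the counter exists only to show how often the slow path runs.

```python
from functools import lru_cache

# Counts how often the slow path actually runs (illustration only).
backend_calls = {"count": 0}


def fetch_display_name(user_id: int) -> str:
    """Hypothetical stand-in for a slow database or network lookup."""
    backend_calls["count"] += 1
    return f"user-{user_id}"


@lru_cache(maxsize=128)
def cached_display_name(user_id: int) -> str:
    """Serve repeated lookups from memory instead of the backend."""
    return fetch_display_name(user_id)


names = [cached_display_name(7), cached_display_name(7), cached_display_name(8)]
print(names, backend_calls["count"], cached_display_name.cache_info())
```

An in-process cache like this suits data that rarely changes. Values that can go stale need an expiry or invalidation strategy, which this sketch leaves out.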

Full Stack Development: Harmonizing Frontend and Backend

1. Seamless Integration of Technologies:

Cultivate expertise in both frontend and backend technologies to facilitate seamless communication and collaboration across the development stack.

Bridge the gap between user interface design and backend functionality to deliver cohesive and impactful web experiences.

2. Agile Project Management and Collaboration:

Collaborate effectively with cross-functional teams, including designers, product managers, and fellow developers, to plan, execute, and deploy web projects.

Utilize agile methodologies and version control systems like Git to streamline collaboration and track project progress efficiently.

3. Lifelong Learning and Adaptation:

Embrace a growth mindset and prioritize continuous learning to stay abreast of emerging technologies and industry best practices.

Engage with online communities, attend workshops, and pursue ongoing education opportunities to expand skill sets and remain competitive in the evolving field of web development.

Conclusion: Mastering full stack development requires a multifaceted skill set encompassing frontend design principles, backend architecture, and effective collaboration. By embracing a holistic approach to web development, full stack developers can craft immersive user experiences, optimize backend functionality, and navigate the complexities of modern web development with confidence and proficiency.

#full stack developer#education#information#full stack web development#front end development#frameworks#web development#backend#full stack developer course#technology


Text

Mastering Fullstack Development: Unifying Frontend and Backend Proficiency

Navigating the dynamic realm of web development necessitates a multifaceted skill set. Enter the realm of fullstack development – a domain where expertise in both frontend and backend intricacies converge seamlessly. In this comprehensive exploration, we'll unravel the intricacies of mastering fullstack development, uncovering the diverse responsibilities, essential skills, and integration strategies that define this pivotal role.

Exploring the Essence of Fullstack Development:

Defining the Role:

Fullstack development epitomizes the fusion of frontend and backend competencies. Fullstack developers are adept at navigating the entire spectrum of web application development, from crafting immersive user interfaces to architecting robust server-side logic and databases.

Unraveling Responsibilities:

Fullstack developers shoulder a dual mandate:

Frontend Proficiency: They meticulously craft captivating user experiences through adept utilization of HTML, CSS, and JavaScript. Leveraging frameworks like React.js, Angular, or Vue.js, they breathe life into static interfaces, fostering interactivity and engagement.

Backend Mastery: In the backend realm, fullstack developers orchestrate server-side operations using a diverse array of languages such as JavaScript (Node.js), Python (Django, Flask), Ruby (Ruby on Rails), or Java (Spring Boot). They adeptly handle data management, authentication mechanisms, and business logic, ensuring the seamless functioning of web applications.

Essential Competencies for Fullstack Excellence:

Frontend Prowess:

Frontend proficiency demands a nuanced skill set:

Fundamental Languages: Mastery in HTML, CSS, and JavaScript forms the cornerstone of frontend prowess, enabling the creation of visually appealing interfaces.

Framework Fluency: Familiarity with frontend frameworks like React.js, Angular, or Vue.js empowers developers to architect scalable and responsive web solutions.

Design Sensibilities: An understanding of UI/UX principles ensures the delivery of intuitive and aesthetically pleasing user experiences.

Backend Acumen:

Backend proficiency necessitates a robust skill set:

Language Mastery: Proficiency in backend languages such as JavaScript (Node.js), Python (Django, Flask), Ruby (Ruby on Rails), or Java (Spring Boot) is paramount for implementing server-side logic.

Database Dexterity: Fullstack developers wield expertise in database management systems like MySQL, MongoDB, or PostgreSQL, facilitating seamless data storage and retrieval.

Architectural Insight: A comprehension of server architecture and scalability principles underpins the development of robust backend solutions, ensuring optimal performance under varying workloads.

Integration Strategies for Seamless Development:

Harmonizing Databases:

Integrating databases necessitates a strategic approach:

ORM Adoption: Object-Relational Mappers (ORMs) such as Sequelize for Node.js or SQLAlchemy for Python streamline database interactions, abstracting away low-level complexities.

Data Modeling Expertise: Fullstack developers meticulously design database schemas, mirroring the application's data structure and relationships to optimize performance and scalability.
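As a rough illustration of the data modeling point, the sketch below defines a two-table schema whose foreign key mirrors a one-to-many relationship. It uses Python's built-in `sqlite3` module directly rather than an ORM such as Sequelize or SQLAlchemy, and the `authors` and `posts` tables are hypothetical examples.

```python
import sqlite3


def build_blog_schema() -> sqlite3.Connection:
    """Create a small two-table schema in an in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves enforcement off by default
    conn.executescript(
        """
        CREATE TABLE authors (
            id   INTEGER PRIMARY KEY,
            name TEXT NOT NULL
        );
        CREATE TABLE posts (
            id        INTEGER PRIMARY KEY,
            author_id INTEGER NOT NULL REFERENCES authors(id),
            title     TEXT NOT NULL
        );
        """
    )
    return conn


conn = build_blog_schema()
conn.execute("INSERT INTO authors (id, name) VALUES (?, ?)", (1, "Ada"))
conn.executemany(
    "INSERT INTO posts (author_id, title) VALUES (?, ?)",
    [(1, "Schemas"), (1, "Indexes")],
)

# One author has many posts; the join follows the foreign key.
rows = conn.execute(
    """
    SELECT authors.name, posts.title
    FROM posts JOIN authors ON authors.id = posts.author_id
    ORDER BY posts.id
    """
).fetchall()

# A post pointing at a missing author is rejected by the database itself.
try:
    conn.execute("INSERT INTO posts (author_id, title) VALUES (?, ?)", (99, "Orphan"))
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

print(rows, orphan_rejected)
```

An ORM would generate similar tables from class definitions. Writing the SQL by hand here keeps the relationship between the schema and the application's data structure visible.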

Project Management Paradigms:

End-to-End Execution:

Fullstack developers are adept at steering projects from inception to fruition:

Task Prioritization: They adeptly prioritize tasks based on project requirements and timelines, ensuring the timely delivery of high-quality solutions.

Collaborative Dynamics: Effective communication and collaboration with frontend and backend teams foster synergy and innovation, driving project success.

In essence, mastering fullstack development epitomizes a harmonious blend of frontend finesse and backend mastery, encapsulating the versatility and adaptability essential for thriving in the ever-evolving landscape of web development. As technology continues to evolve, the significance of fullstack developers will remain unparalleled, driving innovation and shaping the digital frontier. Whether embarking on a fullstack journey or harnessing the expertise of fullstack professionals, embracing the ethos of unification and proficiency is paramount for unlocking the full potential of web development endeavors.

#full stack developer#full stack course#full stack training#full stack web development#full stack software developer


Text

Navigating the Cloud Landscape: Unleashing Amazon Web Services (AWS) Potential

In the ever-evolving tech landscape, businesses are in a constant quest for innovation, scalability, and operational optimization. Enter Amazon Web Services (AWS), a robust cloud computing juggernaut offering a versatile suite of services tailored to diverse business requirements. This blog explores the myriad applications of AWS across various sectors, providing a transformative journey through the cloud.

Harnessing Computational Agility with Amazon EC2

Central to the AWS ecosystem is Amazon EC2 (Elastic Compute Cloud), a pivotal player reshaping the cloud computing paradigm. Offering scalable virtual servers, EC2 empowers users to seamlessly run applications and manage computing resources. This adaptability enables businesses to dynamically adjust computational capacity, ensuring optimal performance and cost-effectiveness.

Redefining Storage Solutions

AWS addresses the critical need for scalable and secure storage through services such as Amazon S3 (Simple Storage Service) and Amazon EBS (Elastic Block Store). S3 acts as a dependable object storage solution for data backup, archiving, and content distribution. Meanwhile, EBS provides persistent block-level storage designed for EC2 instances, guaranteeing data integrity and accessibility.

Streamlined Database Management: Amazon RDS and DynamoDB

Database management undergoes a transformation with Amazon RDS, simplifying the setup, operation, and scaling of relational databases. Be it MySQL, PostgreSQL, or SQL Server, RDS provides a frictionless environment for managing diverse database workloads. For enthusiasts of NoSQL, Amazon DynamoDB steps in as a swift and flexible solution for document and key-value data storage.

Networking Mastery: Amazon VPC and Route 53

AWS empowers users to construct a virtual sanctuary for their resources through Amazon VPC (Virtual Private Cloud). This virtual network facilitates the launch of AWS resources within a user-defined space, enhancing security and control. Simultaneously, Amazon Route 53, a scalable DNS web service, ensures seamless routing of end-user requests to globally distributed endpoints.

Global Content Delivery Excellence with Amazon CloudFront

Amazon CloudFront emerges as a dynamic content delivery network (CDN) service, securely delivering data, videos, applications, and APIs on a global scale. This ensures low latency and high transfer speeds, elevating user experiences across diverse geographical locations.

AI and ML Prowess Unleashed

AWS propels businesses into the future with advanced machine learning and artificial intelligence services. Amazon SageMaker, a fully managed service, enables developers to rapidly build, train, and deploy machine learning models. Additionally, Amazon Rekognition provides sophisticated image and video analysis, supporting applications in facial recognition, object detection, and content moderation.

Big Data Mastery: Amazon Redshift and Athena

For organizations grappling with massive datasets, AWS offers Amazon Redshift, a fully managed data warehouse service. It facilitates the execution of complex queries on large datasets, empowering informed decision-making. Simultaneously, Amazon Athena allows users to analyze data in Amazon S3 using standard SQL queries, unlocking invaluable insights.

In conclusion, Amazon Web Services (AWS) stands as an all-encompassing cloud computing platform, empowering businesses to innovate, scale, and optimize operations. From adaptable compute power and secure storage solutions to cutting-edge AI and ML capabilities, AWS serves as a robust foundation for organizations navigating the digital frontier. Embrace the limitless potential of cloud computing with AWS – where innovation knows no bounds.


Text

Unveiling the Ultimate Handbook for Aspiring Full Stack Developers

In the ever-evolving realm of technology, the role of a full-stack developer has undeniably gained prominence. Full-stack developers epitomize versatility and are an indispensable asset to any enterprise or endeavor. They wield a comprehensive array of competencies that empower them to navigate the intricate landscape of both front-end and back-end web development. In this exhaustive compendium, we shall delve into the intricacies of transforming into a proficient full-stack developer, dissecting the requisite skills, indispensable tools, and strategies for excellence in this domain.

Deciphering the Full Stack Developer Persona

A full-stack developer stands as a connoisseur of both front-end and back-end web development. Their mastery extends across the entire spectrum of web development, rendering them highly coveted entities within the tech sector. The front end of a website is the facet accessible to users, while the back end operates stealthily behind the scenes, handling the intricacies of databases and server management. You can learn it from Uncodemy which is the Best Full stack Developer Institute in Delhi.

The Requisite Competencies

To embark on a successful journey as a full-stack developer, one must amass a diverse skill set. These proficiencies can be broadly categorized into front-end and back-end development, coupled with other quintessential talents:

Front-End Development

Markup Linguistics and Style Sheets: Cultivating an in-depth grasp of markup linguistics and style sheets like HTML and CSS is fundamental to crafting visually captivating and responsive user interfaces.

JavaScript Mastery: JavaScript constitutes the linchpin of front-end development. Proficiency in this language is essential for crafting dynamic web applications.

Frameworks and Libraries: Familiarization with popular front-end frameworks and libraries such as React, Angular, and Vue.js is indispensable as they streamline the development process and elevate the user experience.

Back-End Development

Server-Side Linguistics: Proficiency in server-side languages and runtimes such as JavaScript (Node.js), Python, Ruby, or Java is imperative, as they fuel the back-end functionalities of websites.

Database Dexterity: Acquiring proficiency in the manipulation of databases, including SQL and NoSQL variants like MySQL, PostgreSQL, and MongoDB, is paramount.

API Expertise: Comprehending the creation and consumption of APIs is essential, serving as the conduit for data interchange between the front-end and back-end facets.
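To make the API point concrete, here is a minimal sketch of creating and consuming a JSON endpoint. It uses Python's standard WSGI interface and calls the application in-process instead of over a network. The `/tasks` route, the `TASKS` data, and the `call` helper are assumptions made for the example.

```python
import json
from wsgiref.util import setup_testing_defaults

# Hypothetical in-memory data that the API exposes.
TASKS = {1: {"id": 1, "title": "write docs"}}


def app(environ, start_response):
    """A tiny WSGI application with a single JSON endpoint."""
    method = environ["REQUEST_METHOD"]
    path = environ["PATH_INFO"]
    if method == "GET" and path == "/tasks":
        status, payload = "200 OK", list(TASKS.values())
    else:
        status, payload = "404 Not Found", {"error": "not found"}
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [
        ("Content-Type", "application/json"),
        ("Content-Length", str(len(body))),
    ])
    return [body]


def call(method: str, path: str):
    """Consume the API in-process, as a front end would over HTTP."""
    environ = {}
    setup_testing_defaults(environ)  # fills in the required WSGI keys
    environ["REQUEST_METHOD"] = method
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)


print(call("GET", "/tasks"))
print(call("GET", "/missing"))
```

The same `app` callable could be served by any WSGI server. Frameworks such as Express.js or Django add routing, validation, and authentication on top of this basic request and response exchange.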

Supplementary Competencies

Version Control Proficiency: Mastery in version control systems such as Git assumes monumental significance for collaborative code management.

Embracing DevOps: Familiarity with DevOps practices is instrumental in automating and streamlining the development and deployment processes.

Problem-Solving Prowess: Full-stack developers necessitate robust problem-solving acumen to diagnose issues and optimize code for enhanced efficiency.

The Instruments of the Craft

Full-stack developers wield an arsenal of tools and technologies to conceive, validate, and deploy web applications. The following are indispensable tools that merit assimilation:

Integrated Development Environments (IDEs)

Visual Studio Code: This open-source code editor, hailed for its customizability, enjoys widespread adoption within the development fraternity.

Sublime Text: A lightweight and efficient code editor replete with an extensive repository of extensions.

Version Control

Git: As the preeminent version control system, Git is indispensable for tracking code modifications and facilitating collaborative efforts.

GitHub: A web-based platform dedicated to hosting Git repositories and fostering collaboration among developers.

Front-End Frameworks

React: A potent JavaScript library for crafting user interfaces with finesse.

Angular: A comprehensive front-end framework catering to the construction of dynamic web applications.

Back-End Technologies

Node.js: A favored server-side runtime that facilitates the development of scalable, high-performance applications.

Express.js: A web application framework tailor-made for Node.js, simplifying back-end development endeavors.

Databases

MongoDB: A NoSQL database perfectly suited for managing copious amounts of unstructured data.

PostgreSQL: A potent open-source relational database management system.

Elevating Your Proficiency as a Full-Stack Developer

True excellence as a full-stack developer transcends mere technical acumen. Here are some strategies to help you distinguish yourself in this competitive sphere:

Continual Learning: Given the rapid evolution of technology, it's imperative to remain abreast of the latest trends and tools.

Embark on Personal Projects: Forge your path by creating bespoke web applications to showcase your skills and amass a portfolio.

Collaboration and Networking: Participation in developer communities, attendance at conferences, and collaborative ventures with fellow professionals are key to growth.

A Problem-Solving Mindset: Cultivate a robust ability to navigate complex challenges and optimize code for enhanced efficiency.

Embracing Soft Skills: Effective communication, collaborative teamwork, and adaptability are indispensable in a professional milieu.

In Closing

Becoming a full-stack developer is a gratifying odyssey that demands unwavering dedication and a resolute commitment to perpetual learning. Armed with the right skill set, tools, and mindset, one can truly shine in this dynamic domain. Full-stack developers are in high demand, and as you embark on this voyage, you'll discover a plethora of opportunities beckoning you.

So, if you aspire to join the echelons of full-stack developers and etch your name in the annals of the tech world, commence your journey by honing your skills and laying a robust foundation in both front-end and back-end development. Your odyssey to becoming an adept full-stack developer commences now.


Text

Azure Data Engineering Tools For Data Engineers

Azure is a cloud computing platform provided by Microsoft, which presents an extensive array of data engineering tools. These tools serve to assist data engineers in constructing and upholding data systems that possess the qualities of scalability, reliability, and security. Moreover, Azure data engineering tools facilitate the creation and management of data systems that cater to the unique requirements of an organization.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully-managed, serverless data integration tool designed to handle data at scale. It enables data engineers to acquire, analyze, and process large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.
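One common building block of real-time event processing is the tumbling window, which groups events into fixed, non-overlapping time buckets. The sketch below shows the idea in plain Python. It does not use Azure Stream Analytics or its query language, and the event format of `(timestamp_seconds, key)` pairs is an assumption made for the example.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per key within fixed, non-overlapping time windows."""
    counts = defaultdict(int)
    for timestamp, key in events:
        # Every timestamp belongs to exactly one window, identified by its start.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


# Hypothetical sensor events: (seconds since start, sensor id).
events = [(0, "a"), (3, "a"), (9, "b"), (10, "a"), (12, "a")]
window_counts = tumbling_window_counts(events, window_seconds=10)
print(window_counts)
```

A streaming engine applies the same grouping continuously as events arrive and emits each window's result once that window closes.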

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed, serverless distributed database service that supports multiple database APIs, including PostgreSQL, MongoDB, and Apache Cassandra. It offers automatic and immediate scalability, single-digit millisecond reads and writes, and high availability for NoSQL data. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and supports popular open-source frameworks and applications such as WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure Database for PostgreSQL

Azure Database for PostgreSQL is a fully managed open-source database service designed to let teams focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers superior security, performance optimization through AI, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering

Classes: 200 hours of live classes

Lectures: 199 lectures

Projects: Collaborative projects and mini projects for each module

Level: All levels

Scholarship: Up to 70% scholarship on this course

Interactive activities: labs, quizzes, scenario walk-throughs

Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps

Classes: 180+ hours of live classes

Lectures: 300 lectures

Projects: Collaborative projects and mini projects for each module

Level: All levels

Scholarship: Up to 67% scholarship on this course

Interactive activities: labs, quizzes, scenario walk-throughs

Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#power bi#business intelligence#data analytics course#data science course#data engineering course#data engineering training


Text

Data Engineering Concepts, Tools, and Projects

Organizations everywhere hold large amounts of data. If it is not processed and analyzed, this data does not amount to anything. Data engineers are the ones who make this data usable for analysis. Data engineering is the process of developing, operating, and maintaining software systems that collect, analyze, and store an organization’s data. In modern data analytics, data engineers build data pipelines, which form the underlying infrastructure.

How to become a data engineer:

While there is no specific degree requirement for data engineering, a bachelor's or master's degree in computer science, software engineering, information systems, or a related field can provide a solid foundation. Courses in databases, programming, data structures, algorithms, and statistics are particularly beneficial. Data engineers should have strong programming skills. Focus on languages commonly used in data engineering, such as Python, SQL, and Scala. Learn the basics of data manipulation, scripting, and querying databases.

Familiarize yourself with various database systems like MySQL, PostgreSQL, and NoSQL databases such as MongoDB or Apache Cassandra. Knowledge of data warehousing concepts, including schema design, indexing, and optimization techniques, is also valuable.
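Indexing and query optimization are easiest to understand by watching a query plan change. The sketch below uses SQLite through Python's built-in `sqlite3` module as a stand-in for a server database such as MySQL or PostgreSQL, whose `EXPLAIN` output differs in format. The `orders` table and the index name are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 50, float(i)) for i in range(1000)],
)


def query_plan(connection: sqlite3.Connection) -> str:
    """Return SQLite's description of how it would run the lookup."""
    rows = connection.execute(
        "EXPLAIN QUERY PLAN SELECT total FROM orders WHERE customer_id = ?", (7,)
    ).fetchall()
    # The last column of each plan row holds the human-readable detail.
    return " ".join(row[3] for row in rows)


plan_before = query_plan(conn)  # full table scan: no index covers customer_id
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn)   # the planner can now search the index

print(plan_before)
print(plan_after)
```

Before the index exists, the plan reports a scan of the whole table. Afterwards it reports a search using `idx_orders_customer`, which reads only the matching rows.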

Data engineering tools recommendations:

Data engineering relies on a variety of languages and tools to accomplish its objectives. These tools allow data engineers to carry out tasks like creating pipelines and algorithms more easily and effectively.

1. Amazon Redshift: A widely used cloud data warehouse built by Amazon, Redshift is the go-to choice for many teams and businesses. It is a comprehensive tool that simplifies setting up and scaling data warehouses.

Redshift provides a powerful platform for managing large amounts of data. It allows users to quickly analyze complex datasets, build models for predictive analytics, and create visualizations that make results easier to interpret. Its scalability and flexibility have made it a common choice for data engineering tasks.

2. BigQuery: Like Redshift, BigQuery is a cloud data warehouse, in this case fully managed by Google. It is especially favored by companies that already have experience with the Google Cloud Platform. BigQuery not only scales well but also has robust machine learning features that make data analysis much easier.

3. Tableau: A powerful BI tool, Tableau is the second most popular one from our survey. It helps extract and gather data stored in multiple locations and comes with an intuitive drag-and-drop interface. Tableau makes data across departments readily available for data engineers and managers to create useful dashboards.

4. Looker: An essential BI tool, Looker helps visualize data more effectively. Unlike traditional BI tools, Looker has developed a LookML layer, a language for describing data, aggregates, calculations, and relationships in a SQL database. Spectacles is a newly released tool that assists in deploying the LookML layer, ensuring non-technical personnel have a much simpler time when using company data.

5. Apache Spark: An open-source unified analytics engine, Apache Spark is excellent for processing large data sets. It distributes work across a cluster and runs well alongside other distributed computing programs, making it valuable for data mining and machine learning.

6. Airflow: With Airflow, workflows can be authored and scheduled quickly and accurately, and users can monitor them through the built-in UI. It is the most used workflow solution, with 25% of data teams reporting that they use it.

7. Apache Hive: A data warehouse project built on Apache Hadoop, Hive simplifies data queries and analysis with its SQL-like interface. This query language lets MapReduce tasks be executed on Hadoop and is mainly used for data summarization, analysis, and querying.

8. Segment: An efficient and comprehensive tool, Segment assists in collecting and using data from digital properties. It transforms, sends, and archives customer data, and makes the entire process much more manageable.

9. Snowflake: This cloud data warehouse has become very popular lately because of its storage and compute capabilities. Snowflake's shared data architecture supports a wide range of applications, making it a strong choice for large-scale data storage, data engineering, and data science.

10. dbt: A command-line tool that uses SQL to transform data, dbt is a good fit for data engineers and analysts. It streamlines the entire transformation process and is highly praised by many data engineers.
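To make the idea of SQL-based transformation (the approach described for dbt above) a little more concrete, here is a minimal sketch. It does not use dbt itself: it assumes an in-memory SQLite database from Python's standard library as a stand-in warehouse, and the table names `raw_orders` and `daily_revenue` are hypothetical.

```python
import sqlite3


def build_daily_revenue(conn: sqlite3.Connection) -> list:
    """Rebuild a summary table from raw rows using plain SQL."""
    # The transformation is expressed entirely in SQL: raw rows in,
    # an aggregated table out. Table names are hypothetical.
    conn.executescript(
        """
        DROP TABLE IF EXISTS daily_revenue;
        CREATE TABLE daily_revenue AS
        SELECT order_date, SUM(amount) AS revenue
        FROM raw_orders
        GROUP BY order_date;
        """
    )
    return conn.execute(
        "SELECT order_date, revenue FROM daily_revenue ORDER BY order_date"
    ).fetchall()


def make_sample_connection() -> sqlite3.Connection:
    """Create an in-memory database with a few illustrative raw rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE raw_orders (order_date TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (?, ?)",
        [("2024-01-01", 10.0), ("2024-01-01", 5.5), ("2024-01-02", 7.0)],
    )
    return conn


if __name__ == "__main__":
    print(build_daily_revenue(make_sample_connection()))
```

In a real project the SELECT statement would typically live in its own model file and the tool would handle materialization; the sketch only shows the raw-to-summary step.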

Data Engineering Projects:

Data engineering is an important process for businesses to understand and use in order to gain insights from their data. It involves designing, constructing, maintaining, and troubleshooting databases to ensure they run optimally. Many tools are available to data engineers, such as MySQL, SQL Server, Oracle RDBMS, OpenRefine, Trifacta, Data Ladder, Keras, Watson, and TensorFlow. Each tool has its strengths and weaknesses, so it is important to research each one thoroughly before recommending it for a specific task or project.

Smart IoT Infrastructure:

As the IoT continues to develop, the volume of data ingested at high velocity is growing at an alarming rate. This creates challenges for companies in storage, analysis, and visualization.

Data Ingestion:

Data ingestion is the process of moving data from one or more sources to a target destination for further preparation and analysis. This destination is generally a data warehouse, a specialized database designed for efficient reporting.
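As a rough illustration of the source-to-target movement described above, the sketch below loads rows from a CSV source into a table. It assumes an in-memory SQLite database as a stand-in for the warehouse; the `readings` table and its `sensor_id` and `temperature` columns are hypothetical.

```python
import csv
import io
import sqlite3


def ingest_csv(source, conn: sqlite3.Connection) -> int:
    """Copy rows from a CSV file-like source into the target table.

    Returns the number of rows loaded.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings (sensor_id TEXT, temperature REAL)"
    )
    reader = csv.DictReader(source)
    # Convert each CSV record into a typed tuple before loading it.
    rows = [(r["sensor_id"], float(r["temperature"])) for r in reader]
    conn.executemany("INSERT INTO readings VALUES (?, ?)", rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    sample = io.StringIO("sensor_id,temperature\na1,21.5\nb2,19.0\n")
    warehouse = sqlite3.connect(":memory:")
    print(ingest_csv(sample, warehouse), "rows loaded")
```

A production pipeline would add batching, schema handling, and error recovery; those are left out here to keep the extract-and-load step visible.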

Data Quality and Testing:

Understand the importance of data quality and testing in data engineering projects. Learn about techniques and tools to ensure data accuracy and consistency.
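One simple way to picture data quality checks is a function that scans records for missing required fields and duplicate keys. The sketch below is only an illustration in plain Python; the function name `check_quality` and the sample fields `id` and `email` are hypothetical, and dedicated testing tools offer far more than this.

```python
def check_quality(rows, required, unique_key):
    """Return a list of human-readable problems found in a list of dict rows."""
    problems = []
    seen = set()
    for i, row in enumerate(rows):
        # Completeness: every required field must be present and non-empty.
        for field in required:
            if row.get(field) in (None, ""):
                problems.append(f"row {i}: missing {field}")
        # Consistency: the key column must not repeat.
        key = row.get(unique_key)
        if key in seen:
            problems.append(f"row {i}: duplicate {unique_key}={key}")
        seen.add(key)
    return problems


if __name__ == "__main__":
    records = [
        {"id": 1, "email": "a@example.com"},
        {"id": 2, "email": ""},
        {"id": 1, "email": "c@example.com"},
    ]
    for problem in check_quality(records, required=["id", "email"], unique_key="id"):
        print(problem)
```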

Streaming Data:

Familiarize yourself with real-time data processing and streaming frameworks like Apache Kafka and Apache Flink. Develop your problem-solving skills through practical exercises and challenges.
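A common building block in stream processing is the tumbling window, which groups events into fixed, non-overlapping time intervals. The sketch below shows the idea in plain Python and does not use Apache Kafka or Apache Flink; it assumes events arrive in time order as `(timestamp_seconds, key)` pairs, and the function name is hypothetical.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Yield (window_start, counts_by_key) for each fixed-size window.

    Assumes events are (timestamp, key) pairs in non-decreasing time order.
    """
    current_start = None
    counts = defaultdict(int)
    for ts, key in events:
        # Align the timestamp to the start of its window.
        start = ts - (ts % window_seconds)
        if current_start is None:
            current_start = start
        elif start != current_start:
            # The window has closed: emit it and begin a new one.
            yield current_start, dict(counts)
            counts = defaultdict(int)
            current_start = start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)


if __name__ == "__main__":
    stream = [(0, "click"), (3, "view"), (9, "click"), (12, "click"), (25, "view")]
    for window in tumbling_window_counts(stream, window_seconds=10):
        print(window)
```

Because the function is a generator, it processes one event at a time and never holds more than the current window in memory, which mirrors how streaming frameworks handle unbounded input.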

Conclusion:

Data engineers use these tools to build data systems. Working with databases such as MySQL, SQL Server, and Oracle RDBMS involves collecting, storing, managing, transforming, and analyzing large amounts of data to gain insights. Data engineers are responsible for designing efficient solutions that can handle high volumes of data while ensuring accuracy and reliability. They use a variety of technologies, including databases, programming languages, and machine learning algorithms, to create powerful applications that help businesses make better decisions based on the data they collect.
